Lab Updates:

Welcome to the Lab

This page serves as the continuous integration and testing sandbox for my enterprise-grade networking, virtualization and security configurations. My goal is to simulate real-world infrastructure challenges to test my own skills in system administration, network segmentation and deployments.

- Hybrid Networking: Managed via a UniFi Cloud Gateway Ultra, using VLAN segmentation to isolate IoT, guest network, management, home connected devices and production traffic.

- Virtualization & Computing: Running a high availability environment on Proxmox VE to host services such as Docker containers, Pi-hole, game servers, PXE host servers and Windows 10 Enterprise guest VMs.

- Security First Design: By using VLANs to segment my network traffic I'm keeping all network users safe. As the game servers are port-forwarded to the outside world, security is a massive consideration, so I decided to go with Ubiquiti's UniFi line of devices for my home network. This helps me ensure proper port-forwarding, a proper home firewall and proper VLANs.

22nd of January 2026: Scoring the HP ProDesk

The journey began with finding a cheap workstation on Facebook Marketplace. The specs were really good for the price: it was an HP ProDesk 600 G3 with an i7-7700, 16GB of DDR4 running at 3200MHz and a 256GB SSD.

23rd of January 2026:

I reached out to the seller, got a response and decided to purchase a TP-Link 8-port Easy Smart switch on Amazon. This would be the backbone to getting everything to work, and it was very fitting as just last year I installed 2x TP-Link Deco X50s as the new main router within the house. Little did I know this was just the start of the rabbit hole.

24th of January 2026: Bare metal: Transitioning to Proxmox & Linux CLI

I picked up the workstation from the seller and decided to install Proxmox VE onto it, so this SFF workstation became the main node. I then installed Ubuntu Server straight on the device. Being familiar with the Windows command line is one story, but being familiar with a Linux distro's command line was another thing. As I'm more of a GUI admin I installed Portainer straight after, and the first two things I did were to set up a Minecraft Java server and a Pi-hole DNS server. At first Pi-hole didn't work and I needed to keep researching how to configure it correctly, but the Minecraft server was working correctly on my local network, as it wasn't port-forwarded yet.

25th of January 2026:

This was the day I finally figured out how to get Pi-hole working correctly on my network. The issue that stopped Pi-hole running the day prior was not having the correct directories, so it was back to SSH into my Ubuntu command line, using the simple mkdir command to create these directories, and then like magic it worked. I also figured out how to port forward my Minecraft server, and the way I did this was very simple. I created a DDNS hostname through the TP-Link Deco app and named it lozmcservers.tplinkdns.com. I didn't like the naming scheme much, so I decided to buy a domain from Cloudflare and CNAME it to the DDNS hostname so I could have a custom domain.
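The missing-directory fix can be sketched in Python as a rough equivalent of `mkdir -p`. This is only an illustration: the directory names `etc-pihole` and `etc-dnsmasq.d` are assumptions borrowed from the commonly used Pi-hole Docker layout, and the helper name `ensure_pihole_dirs` is hypothetical. The actual paths used on the day are not recorded here.

```python
from pathlib import Path

# Hypothetical bind-mount directory names (assumption, not taken from this log).
PIHOLE_DIRS = ("etc-pihole", "etc-dnsmasq.d")


def ensure_pihole_dirs(base: Path, names=PIHOLE_DIRS) -> list[Path]:
    """Create each directory under `base` if it is missing, like `mkdir -p`."""
    created = []
    for name in names:
        path = base / name
        # parents=True creates `base` too; exist_ok=True makes reruns harmless.
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created


if __name__ == "__main__":
    import tempfile

    # Demonstrate against a throwaway directory instead of a real host path.
    with tempfile.TemporaryDirectory() as tmp:
        for directory in ensure_pihole_dirs(Path(tmp) / "pihole"):
            print(directory.name, directory.is_dir())
```

Because the function is safe to run repeatedly, it could be called before every container start without checking whether the directories already exist.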

27th of January 2026:

I went into work and decided to tell the ICT manager what I had been up to recently, how I'm learning system administration and that I have my own server setup at home. He then asked me if I'd like to take home a free server he never ended up using. Little did I know this would turn out to be a massive powerhouse.

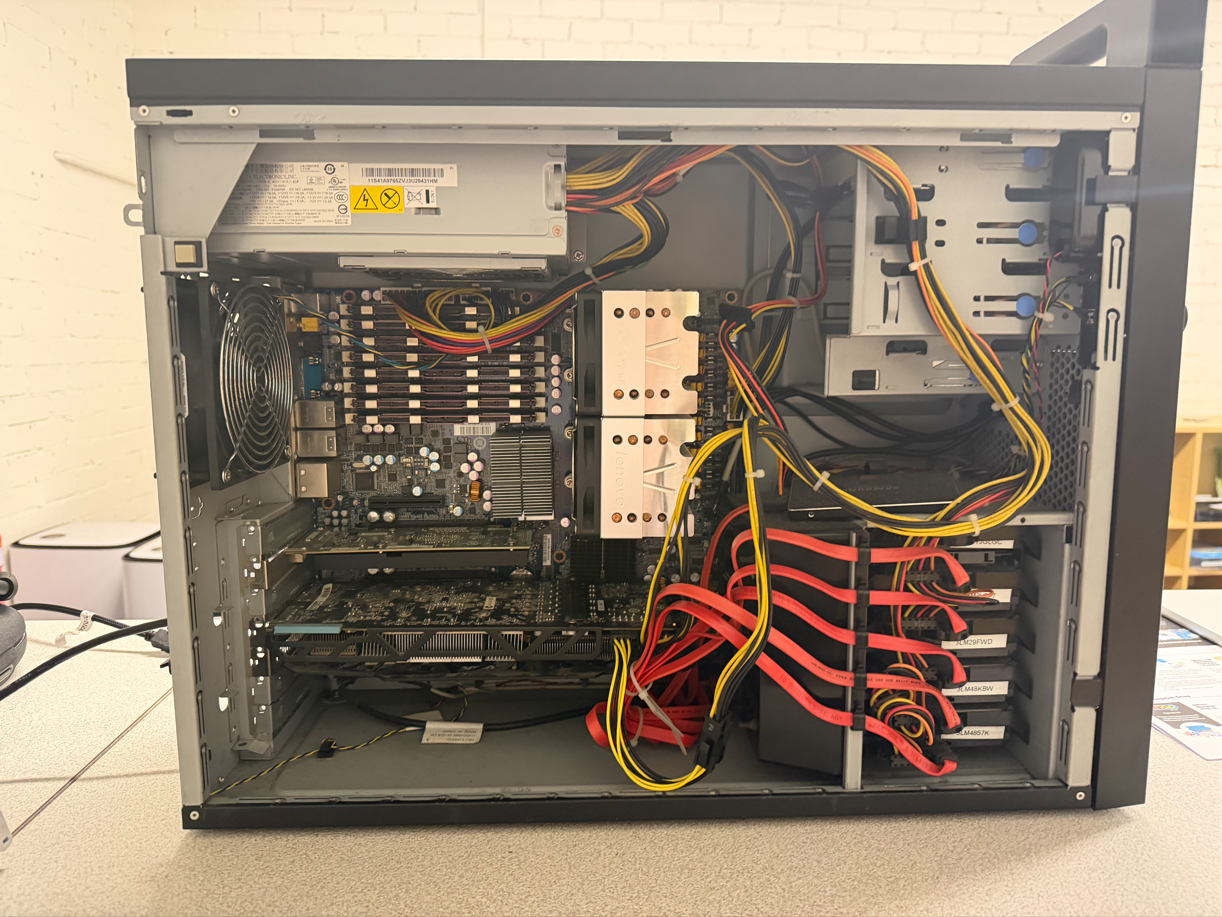

28th of January 2026: The powerhouse arrival: Dual-Socket Xeon Beast

I collected the server from the ICT manager and checked it out while I was at work. I was surprised: it was a dual socket Xeon Lenovo ThinkStation D10 from around 2009. The specs were 2x Xeon X5460 CPUs, 10GB of DDR2 ECC RAM running at 600MHz, 5 SAS hard drives and a Samsung 860 Pro 256GB as the boot drive. This server was fully wiped of anything minus the remaining install of Windows Server, possibly due to the security screen? As I didn't think of using Windows Server for myself at the time, I created a Proxmox VE boot DVD at home, fully wiped the machine and clustered it with my other node. This would become a nightmare. My initial idea was to run this server as a PBS (Proxmox Backup Server) with RAID 10 redundancy in mind, but its PSU was rated at 1000W compared to my other Proxmox VE node, which had a 180W Platinum PSU. It was loud and pulled a lot of power due to the Nvidia Quadro and the 2 Xeons installed, which alone had a TDP of 120W per CPU, so this server would stay off unless I wanted to back up my first node.

29th of January 2026: The VPN Struggle: WireGuard vs Tailscale

I decided to give installing WireGuard a shot before I started work. This didn't go to plan at all. I originally thought it would take me 15 minutes max, but the conclusion I came to was that my ISP blocked forwarding on UDP port 51820, which WireGuard needed. I then attempted to install Tailscale, which I knew used WireGuard as the backend. This took me about 10 minutes and it worked straight away.

After work I spent about 3 hours trying to get PBS working on the ThinkStation, however all my attempts failed. I was still learning Proxmox and hadn't set up backups correctly, so the storage destination was never found even though the server was on. This really pulled me down and I couldn't figure it out at all. I then decided I'd just create a backup schedule on my other node, which would keep a few days of backups in case I needed to roll back anything. The next step in future would be buying a QNAP NAS or UniFi UNAS Pro 4, putting in 20TB Seagate FireCuda drives, running that as RAID 10 for redundancy and using it for Immich to back up all photos to my 'cloud'.
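To put a number on that RAID 10 plan, here is a small sketch of the usable-capacity arithmetic. RAID 10 mirrors drives in pairs and stripes across the pairs, so usable space is half the raw total. The drive count of four is an assumption (the plan above only names the 20TB drive size), and the function name is hypothetical.

```python
def raid10_usable_tb(drive_count: int, drive_size_tb: float) -> float:
    """Usable capacity of a RAID 10 array of identical drives, in TB."""
    # RAID 10 needs mirrored pairs, so the count must be even and at least 4.
    if drive_count < 4 or drive_count % 2 != 0:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    # Each mirrored pair contributes the capacity of a single drive.
    return (drive_count // 2) * drive_size_tb


if __name__ == "__main__":
    # Assumed: four bays, each filled with a 20TB drive.
    print(raid10_usable_tb(4, 20))
```

Under that assumption, four 20TB drives give 80TB raw but 40TB usable, which is the trade-off for surviving a drive failure in each mirrored pair.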

30th of January 2026:

After work I took one of the SAS drives out of the server to use as a temporary backup drive in the first Proxmox node. This failed, as at the time I hadn't researched that a SAS drive doesn't just magically work on a SATA connection. I heard the drive platters start up when the power was turned on, but straight away they would stop spinning. I did my 5 minutes of research, found it wasn't possible, took the drive out and put it back in the ThinkStation D10.

7th of February 2026:

I decided to take a break from everything and do some more research. I figured it would be better to take some days off from thinking about the setup and mentally refresh myself. I was talking to my uncle about his server setup at home, and he was showing me how he runs his home-lab, the UniFi infrastructure behind it and how awesome it is. I started doing my own research into UniFi's line of products and how everything was very simple to use. The big sellers were remote management from anywhere, VLAN support and a dedicated IoT network. I did some research that night into Cloud Keys and the Cloud Gateway Ultra, which had the expensive Cloud Key built into it already. As luck had it my local JW Computers had the Cloud Gateway Ultra on sale, so I decided to do more research.

8th of February 2026: The Network Brain Surgery: Migrating to UniFi

I decided that I would go and purchase the UniFi Cloud Gateway Ultra. At the time I paid $189, so I got a good $40 off. Little did I realise this would take me from the surface of the rabbit hole deeper and deeper. It took me about 30 minutes to turn off the original Deco X50s and put them into AP mode, which then made the whole internet black out. That's all good, I thought, so I plugged in the Cloud Gateway Ultra and it started to download the OS, which was great. Luckily, with the speeds we get from our ISP, the download was pretty fast and took about 10 minutes to complete. But then I ran into my first hurdle: I needed to create a UniFi account and get an MFA code, and we had no WiFi. I needed to go outside, hold my phone up to the sky for a solid 2 minutes and pray I got a code. Luckily I did, I put it into the app, it worked and I was able to continue with setup. It tested the ISP speeds and we were getting exactly what we pay for. I completed setup and plugged in the Decos, as they were in AP mode already. I was thinking to myself how I would explain that I had changed the routers over and needed to put a new SSID into each device we had. Funnily enough, my phone connected to the network again, then my personal laptop did, and then I tried pinging outside the network and it worked. I still don't understand how the UniFi Cloud Gateway took the SSID from the Decos and everything connected back up, but I wasn't complaining at all.

Now for the fun part, which was telling my friends the Minecraft server would be offline for an hour. I had to learn how to change my main Proxmox node's static IP and gateway, because Deco and UniFi use different subnets. Originally it was a 192.168.68 address, which I think Decos use to avoid conflicts with any routers already running within the network, as most routers will snatch 192.168.1.1. I then had to change the IP to a 192.168.1 address and the subnet accordingly. This worked, but I was wondering why my IP didn't show in my UniFi app. I finally found my first node listed with a completely different IP. Of course the new IP I set would work, but switching routers had reset every single DHCP reservation I had, so I then changed the IP to match the one shown in the UniFi app so it was easier to track any traffic.
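The reason the old static IP stopped working can be checked with Python's standard `ipaddress` module. This is a rough sketch: the /24 prefix lengths and the `.50` host addresses are assumptions for illustration, as only the 192.168.68 and 192.168.1 prefixes are known from the migration above.

```python
import ipaddress

# Assumed prefix lengths; only the first three octets come from the setup above.
OLD_DECO_NET = "192.168.68.0/24"
NEW_UNIFI_NET = "192.168.1.0/24"


def same_subnet(host: str, network: str) -> bool:
    """Return True if `host` is an address inside `network`."""
    return ipaddress.ip_address(host) in ipaddress.ip_network(network)


if __name__ == "__main__":
    old_node_ip = "192.168.68.50"  # hypothetical static IP from the Deco days
    new_node_ip = "192.168.1.50"   # hypothetical static IP after the migration

    # The old address is outside the new gateway's subnet, so it is unreachable.
    print(same_subnet(old_node_ip, NEW_UNIFI_NET))
    # The new address sits inside the subnet the gateway now serves.
    print(same_subnet(new_node_ip, NEW_UNIFI_NET))
```

A check like this is handy before editing a node's network config, since a static IP outside the gateway's subnet leaves the node unreachable until someone fixes it from a local console.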

I then had to create a DuckDNS domain and a script on my first node to automatically update my public IP, as we have a dynamic public IP and I wasn't going to pay my ISP $15 extra per month for a static one. I then port forwarded the Minecraft port and changed the CNAME record, and everyone was back on the Minecraft server with the same server address as before.
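An updater script along those lines might look like the sketch below. It is not the script running on the node. It assumes DuckDNS's documented HTTP update endpoint, where leaving `ip` empty asks DuckDNS to use the caller's public address and the response body is `OK` on success. The subdomain `example-lab` and the token value are placeholders, and the function names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

DUCKDNS_UPDATE_URL = "https://www.duckdns.org/update"


def build_update_url(subdomain: str, token: str, ip: str = "") -> str:
    """Build the DuckDNS update URL; an empty ip lets DuckDNS detect it."""
    query = urlencode({"domains": subdomain, "token": token, "ip": ip})
    return f"{DUCKDNS_UPDATE_URL}?{query}"


def update_duckdns(subdomain: str, token: str, timeout: float = 10.0) -> bool:
    """Send the update request and report whether DuckDNS answered OK."""
    with urlopen(build_update_url(subdomain, token), timeout=timeout) as response:
        return response.read().decode().strip() == "OK"


if __name__ == "__main__":
    # Placeholder values; a real run would call update_duckdns() on a schedule.
    print(build_update_url("example-lab", "0000"))
```

Scheduling `update_duckdns` every few minutes (for example from cron) keeps the DuckDNS record pointing at the current public IP, so the CNAME on the custom domain keeps resolving after the ISP changes the address.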

10th of February 2026:

I thought of an idea: why not install Windows Server 2019 and learn how to create a DC, GPOs and users within my home network? That was a fun idea from the outside, but when you have a dinosaur as your second server it becomes harder. I decided the easiest way was to create a PXE server and install the ISO via Ethernet, which would've worked out to be faster. However, my attempts at getting the ThinkStation to boot PXE didn't work. I believe this was an internal issue where the first Proxmox node and the ThinkStation weren't communicating correctly, as the ThinkStation was receiving an IP address and MAC address which weren't the Proxmox node's at all.

11th of February 2026: Ghost in the machine: Deploying Windows Server 2019

I pondered the idea of taking out the SSD and using a ThinkPad at work to install Windows Server 2019 straight onto it. Thankfully this worked, and I was able to wipe Proxmox and install Windows Server straight on the SSD. I went home and put it back into the server, where it spent a few minutes checking the hardware and then booted straight into Windows Server 2019 with the hardware updated. I was surprised at first that it worked, given the ThinkStation only has DDR2, but it still ran pretty well. I promoted the ThinkStation to be the domain controller and created some users, GPOs and policies with AD DS and the Group Policy Management Console (GPMC). I then realised I had an issue with Proxmox: the old node was still there, and I needed to delete it and, more importantly, take everything out of the cluster. Luckily this was simple, as I found the commands from someone whose node had previously broken and needed to be removed from the cluster.

13th of February 2026:

I created a Windows 10 Enterprise VM on my first Proxmox node. As both my servers run without displays I needed to RDP into both of them, so at one point I had 2 different RDP sessions running across both of my PC displays. I had to figure out how to join that Enterprise install to my domain. This was very simple: I was able to add it to the domain and then classify where the computer sat within it. As I have many different OUs set up, I just moved the VM into the correct one, which was either Downstairs or Upstairs. Both of those contain users, computers, roles, groups and a few other OUs within them as well.

14th of February 2026: The "Lack-Rack" Revelation: Aesthetic Infrastructure

I decided the current setup needed a small makeover, so I went to IKEA and purchased a LACK side table. Anyone who works within ICT will know about the infamous LACK server rack. I decided not to pursue that idea but to pursue the idea of making the setup cleaner and less messy. This turned out to be a good idea, as it only cost me $12 but makes the setup look and feel cleaner.

To be continued...

Current Server Setup:

As I haven't had the chance to discuss how the current setup has been achieved, I will dedicate this section to it. I currently have the first Proxmox server running upstairs, connected back into the TP-Link switch from before, which then piggybacks onto a Deco X50 that is running wirelessly. It sounds crazy, but it actually works and nothing currently running is bottlenecked by that setup. The only issue is that if any VLANs are set up, it is very likely their tags will be stripped and they will not work correctly. This feeds into the reason why the ThinkStation stays connected directly to the router for now (until I can purchase a server rack and a UniFi managed switch to mount within the rack).